Evolutionary surprise, artificial intelligence, and H5N8

A story about what happens when we push machine learning to its outer limits.

Dr. Colin Carlson (Georgetown University)

This year, something happened that’s never happened before.

On February 18, Russia sent a memo to the World Health Organization telling the world about seven clinical cases of influenza in poultry farm workers. The notification came only a couple of months after a massive poultry die-off on the farm: in the first week of December, over 100,000 chickens (out of 900,000) died, prompting an outbreak investigation. All of this is fairly normal: influenza circulates constantly in wild birds, poultry, and humans, and jumps between them often. This outbreak was special, though, because for the first time, H5N8 influenza had made the jump to humans.

Influenza A strains are named based on two proteins: hemagglutinin (H) and neuraminidase (N). There is a finite set of shapes and structures for each of these proteins, and we name flu lineages based on the combination we see in a viral genome. In wild birds, highly pathogenic avian influenza is usually either H5 or H7. Sometimes we see H5N1 jump to humans, but these viruses are usually not transmissible between people. But up to this point, we had never seen H5N8 successfully infect a human host.

That poses a really interesting question for our team: what happens to our models when we see something that’s never been seen before?

The better ecologists get at prediction, the more they’ve worked to develop a shared language for communicating uncertainty.

Uncertainty comes from many places. Data are usually an incomplete representation of reality, filtered through a sampling process that is both partial and often biased; both of those add uncertainty to models. Models are also trained on finite datasets, and usually harness a bit of stochasticity to make their inference more powerful, but that adds uncertainty to the final result. All of these kinds of uncertainty are hard enough to distinguish, but they’re all easier to grapple with than another, more nebulous kind: sometimes, our baseline concept of reality changes, and so the data no longer describe reality.

This is where ecological surprise comes in. Karen Filbee-Dexter and colleagues define ecological surprise:

…as a situation where human expectations or predictions of natural system behavior deviate from observed ecosystem behavior. This can occur when people (1) fail to anticipate change in ecosystems; (2) fail to influence ecosystem behavior as intended; or (3) discover something about an ecosystem that runs counter to accepted knowledge.

The idea of ecological surprise gives us useful language to talk about what happens when we find something we can’t understand - or when we make predictions, and reality breaks from them in a massive way. Sure, there are things we can do to avoid being blindsided, like using more comprehensive long-term datasets or tightening the feedback loop between experiments and models. But to some degree, surprise is an inevitable part of studying complex systems.

Knowing that puts us in an uncomfortable place as modelers. It’s easy enough to dismiss prediction entirely on the grounds that surprise is always possible (and any modeler who has ever sent a paper to peer review has probably seen that criticism leveled by skeptical experimentalists). But it’s harder to look at our work and think critically about where solid ground stops and surprise might be possible - and then to communicate that uncertainty clearly and carefully.

This is a big problem for research forecasting disease emergence, a process that isn’t just ecological and social, but also evolutionary. Though it leans into our anthropomorphic worse natures to say it, viruses are clever: they constantly develop new ways of infecting and harming hosts, ways we’ve never seen before. Their unparalleled rate of evolution lets them explore the space of biological possibility much faster than most life on Earth, and - from the vantage point of human health - that can be a problem. (When the outcome variable is human mortality, an evolutionary surprise is almost always unwelcome.)

When virologists express skepticism of efforts to predict disease emergence, they’re almost always talking about the possibility of evolutionary surprise. Most of the evolutionary diversity of viruses is still unsampled; we know only about 1% of the global virome today, and even the best-studied viruses harbor undiscovered diversity (e.g., the influenza-like viruses that are increasingly being discovered in basal vertebrates). And maybe more importantly, the global virome isn’t a static uncharted territory; it’s constantly evolving and expanding, and familiar viruses are constantly developing new adaptations that let them cross species barriers.

And that brings us back to flu.

One of our team’s biggest interests is zoonotic risk technology: models that look at a viral genome (plus maybe its ecology or structure) and try to guess whether it could infect humans. Those models are starting to work at the scale of the global virome, but data are a bit limiting at those scales. It’s easier to run these models for a system like influenza, where some strains show zoonotic potential and others don’t. Thanks to databases like GISAID and the Influenza Research Database, we have access to tremendous quantities of data: millions of viral genomes, packaged in a format ready to power our models. Various researchers have used these genomes to try to predict which influenza viruses could infect humans.

There’s a conceptual challenge that sits at the heart of these models, though, which we often find ourselves debating on our team. It’s maybe easiest to explain as a set of small, interlinked thoughts:

1. Some animal viruses could infect humans if they had the opportunity, but haven’t yet. Others can’t.
2. That zoonotic potential exists as an intangible property of these viruses in nature. It can be demonstrated experimentally, but no one is doing those experiments at scale. So we can’t generate a dataset of animal viruses sorted by zoonotic potential.
3. What we have access to are data on human-infective viruses and animal-infective viruses. We ask machine learning models to distinguish the two, then apply them to the animal-infective viruses to ask which look most like human-infective viruses.
4. These models could be making actual inferences (learning why some viruses can infect humans while others can’t), but might also just be succeeding at classification (telling human and animal viruses apart by reiterating patterns in the data).
5. There’s no way to tell the two apart just by looking. We can use training-test splits, but our training and test data are generated by the same sampling process, so they are inherently non-independent.
6. A model that makes actual inferences might allow us to anticipate evolutionary surprises. A model that’s just a successful classifier will be incredibly vulnerable to them.
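To make the difference between inference and classification concrete, here is a deliberately naive Python sketch: a "classifier" that simply memorizes the most common host for each lineage. The toy records and function names are hypothetical, not real surveillance data or anyone's published model, and real models use sequence features rather than lineage labels. The point is only that a model can score perfectly on test data drawn from the same sampling process while being guaranteed to miss a human-infective H5N8.

```python
from collections import Counter, defaultdict

# Hypothetical toy records: (lineage, host the virus was sampled from).
# A caricature for illustration; real models work from sequence features.
TRAIN = [
    ("H5N1", "avian"), ("H5N1", "avian"), ("H5N1", "human"),
    ("H3N2", "human"), ("H3N2", "human"),
    ("H1N1", "human"),
    ("H5N8", "avian"), ("H5N8", "avian"), ("H5N8", "avian"),
]

# Test data drawn from the same sampling process as the training data.
SAME_PROCESS_TEST = [("H5N1", "avian"), ("H3N2", "human"), ("H5N8", "avian")]

# The surprise: a human-infective virus from a lineage only ever seen in birds.
SURPRISE = [("H5N8", "human")]


def fit_lineage_memorizer(records):
    """Predict each lineage's most common host: pure pattern recall, no inference."""
    by_lineage = defaultdict(Counter)
    for lineage, host in records:
        by_lineage[lineage][host] += 1
    return {lineage: hosts.most_common(1)[0][0] for lineage, hosts in by_lineage.items()}


def accuracy(model, records, default="avian"):
    """Share of records whose host matches the prediction (unseen lineages get `default`)."""
    hits = sum(model.get(lineage, default) == host for lineage, host in records)
    return hits / len(records)


model = fit_lineage_memorizer(TRAIN)
print(accuracy(model, SAME_PROCESS_TEST))  # 1.0
print(accuracy(model, SURPRISE))           # 0.0
```

A real classifier would fail in a subtler way, but a perfect score on a same-process test split cannot, on its own, rule out this kind of behavior.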

Or, to put this another way: those models might be very good at telling apart human H5N1 from animal H5N1, but that might be all they’re good at, and they might only be good at telling apart lineages that have emerged so far. They wouldn’t be ready to handle, say, the first human-infective H5N8 - because none of these models have ever seen a human-infective H5N8 before. They’ve seen avian H5N8, and have been told that every virus that looks like H5N8 is an avian virus. Because we’ve seen a human-infective H5N8, we know now that some of those avian lineages apparently had zoonotic potential - but the model still doesn’t know that.

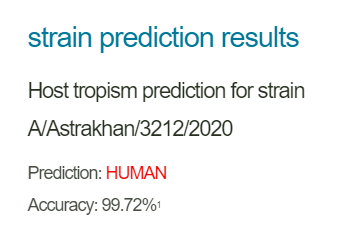

So what actually happens if we run the model on the new H5N8?

Thanks to the miracle of data sharing that is the global influenza surveillance system, you can download* the full genome sequence of the novel H5N8 influenza A/Astrakhan/3212/2020 from GISAID. I downloaded it, and used the NCBI flu annotation tool** to correctly divide the proteins into the format used by FluLeap, a web platform that applies Christine Eng et al.’s zoonotic risk technology for influenza sequence data. FluLeap takes each protein sequence, plus the combined genome, and runs them through a random forest model that classifies the sequence as either “avian” or “human”. It’s intuitive and easy to use - give it a whirl.
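For readers curious about the input side of "run each protein through a random forest," here is a minimal Python sketch of one common way to turn protein sequences into fixed-length feature vectors: amino-acid composition. This is an assumption for illustration only. It is not FluLeap's code, not necessarily the feature set Eng et al. used, and the function names are hypothetical. It builds one vector per protein plus one for a concatenated "combined" sequence, loosely mirroring the per-protein and whole-genome inputs described above.

```python
# Hypothetical feature extraction: amino-acid composition per protein.
# An illustrative assumption, not FluLeap's actual feature set.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard amino acids


def aa_composition(seq):
    """Return the fraction of each standard amino acid in `seq`.

    Characters outside the 20 standard residues (e.g. 'X' for unknown,
    '*' for a stop) are skipped.
    """
    counts = dict.fromkeys(AMINO_ACIDS, 0)
    for residue in seq.upper():
        if residue in counts:
            counts[residue] += 1
    total = sum(counts.values())
    if total == 0:
        raise ValueError("sequence has no standard amino acids")
    return [counts[aa] / total for aa in AMINO_ACIDS]


def genome_features(proteins):
    """One vector per protein, plus one for all proteins concatenated.

    The 'combined' vector is a stand-in for a whole-genome input; how
    FluLeap actually builds its combined input is not reproduced here.
    """
    features = {name: aa_composition(seq) for name, seq in proteins.items()}
    combined = "".join(proteins[name] for name in sorted(proteins))
    features["combined"] = aa_composition(combined)
    return features


# Arbitrary toy strings, not real influenza protein sequences.
example = genome_features({"toy_1": "MKTAYIAKQR", "toy_2": "GSHMLEDPVA"})
print(sorted(example))             # ['combined', 'toy_1', 'toy_2']
print(len(example["combined"]))    # 20
```

Each vector could then be passed to a classifier trained on labeled avian and human sequences; composition is just one simple, alignment-free choice.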

FluLeap has a static training and test dataset, which was compiled a few years back. The model has never seen a human-infective H5N8 virus. That makes it all the more surprising that its overall prediction for this virus is human.

As with most of the recently emerged human strains in the original Eng studies, the protein-level classifications are a mix of avian and human - a signal that these viruses look a lot more like their avian counterparts than most human lineages do, and maybe haven’t had a chance to adapt to humans much yet. Six proteins come out avian in the final model (HA, M1, NA, NP, NS2, and PA), while five come out human (M2, NS1, PB1, PB1-F2, and PB2).

It’s particularly notable that both the hemagglutinin and neuraminidase proteins are classified as avian. That makes sense: the model has seen other avian H5N8 viruses, it knows what those particular proteins look like, and when it sees them, it says “looks avian to me!” But that makes it all the more surprising that the model predictions are as mixed as they are - and that the total prediction comes out human.
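The split is close enough to be worth spelling out. Suppose the eleven protein-level calls were combined by a simple majority vote. That rule is hypothetical and used here only for illustration; the post describes FluLeap running the combined genome through the model, not tallying protein votes. Under it, the virus would come out avian, six to five. The short Python sketch below does that tally using the calls reported above.

```python
from collections import Counter

# Protein-level calls for A/Astrakhan/3212/2020, as reported in the text.
PROTEIN_CALLS = {
    "HA": "avian", "M1": "avian", "NA": "avian",
    "NP": "avian", "NS2": "avian", "PA": "avian",
    "M2": "human", "NS1": "human", "PB1": "human",
    "PB1-F2": "human", "PB2": "human",
}


def majority_vote(calls):
    """Hypothetical combination rule: most common label wins; equal top counts tie."""
    ranked = Counter(calls.values()).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return "tie", dict(ranked)
    return ranked[0][0], dict(ranked)


label, tally = majority_vote(PROTEIN_CALLS)
print(label, tally)  # avian {'avian': 6, 'human': 5}
```

So the "human" call for the genome as a whole cannot be explained by simply counting the protein-level calls.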

In a blog post from a couple of years back, prominent influenza virologist Ian Mackay wrote about the need to start looking beyond the H and N genes:

We also know that mutations in other flu virus strands can have a range of effects on the new variant viruses that result. But we don’t watch those other genes to the same extent that we watch H and N. It might be time to [do] that more than we have. We have the tools. There are questions that might be answered if we consider the whole virus and not just some of its parts.

The unusual result here underscores this point. When we look beyond the H and N sequences, the model is able to identify the signature of human adaptation and/or compatibility with a human host, and correctly identifies this as a post-emergence virus. That suggests that if we look at those genes in the H5N8 viruses circulating in birds, we might be able to identify lineages with the same potential.

But, it could have turned out differently. Before we actually ran the model, there was no way to know just how far it could extend into this evolutionary space. In another iteration of the same model, with the same data, we might’ve gotten a different stochastic outcome. And, it could still turn out differently from here; there’s no guarantee this would work correctly for:

- Identifying avian H5N8 lineages with zoonotic potential
- Future H5N8 emergence events in humans
- Other novel HXNX combinations in humans
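The stochastic outcome mentioned above has a concrete source. Random forests fit each tree to a random bootstrap resample of the training data (and, in most implementations, consider a random subset of features at each split), so two fits on identical data can disagree on borderline cases. Below is a stripped-down Python sketch: bagged decision stumps on made-up one-dimensional data, a simplified stand-in for a random forest rather than FluLeap's model. All names and data are hypothetical. Refitting with different seeds shows how the "human" vote share for a borderline point can move. Whether it actually flips the label depends on the data.

```python
import random

# Hypothetical 1-D toy data: (feature, label), 1 = "human", 0 = "avian".
# Values chosen so the two classes overlap near 0.5.
DATA = [(0.10, 0), (0.20, 0), (0.35, 0), (0.45, 1), (0.50, 0),
        (0.55, 1), (0.60, 0), (0.70, 1), (0.80, 1), (0.90, 1)]


def fit_stump(sample):
    """Choose the threshold (from the sample's own x values) with the fewest
    errors when predicting "human" for x >= threshold."""
    best_t, best_err = None, None
    for t, _ in sample:
        err = sum((x >= t) != bool(y) for x, y in sample)
        if best_err is None or err < best_err:
            best_t, best_err = t, err
    return best_t


def fit_bagged_stumps(data, n_models, seed):
    """Fit each stump to a bootstrap resample (drawn with replacement)."""
    rng = random.Random(seed)
    return [fit_stump([rng.choice(data) for _ in data]) for _ in range(n_models)]


def human_vote_share(stumps, x):
    """Fraction of stumps that call x "human"."""
    return sum(x >= t for t in stumps) / len(stumps)


for seed in range(5):
    stumps = fit_bagged_stumps(DATA, n_models=25, seed=seed)
    share = human_vote_share(stumps, 0.52)
    label = "human" if share > 0.5 else "avian"
    print(f"seed={seed} human share={share:.2f} -> {label}")
```

Fixing the seed makes a single run reproducible, but it does not remove the underlying sensitivity; that only shows up when you refit and compare.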

There are those who would look at that uncertainty and rule out the value of the entire exercise - or even argue strongly against the use of these technologies in applied settings. But I arrive at a slightly different conclusion.

Evolutionary surprise is an opportunity to push the limits of what we know, and to break the tools we make, over and over, until they become stronger - until we can tell a model that handles surprise gracefully apart from one that crumbles. In our own betacoronavirus work, we’re learning that eight predictive models (all of which perform well on training-test splits) have radically different rates of success in predicting newly discovered hosts; only two or three of those models perform substantially better than random. But those few share a common feature (they use traits characterizing bat ecology and evolution) that lets us improve future models and make the right choices when building these tools.
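"Better than random" can be made concrete in a few lines. One simple check, sketched below in Python, compares how many newly discovered hosts land in a model's top-k list against the number expected if the list were drawn at random (k × new hosts ÷ candidates, the mean of a hypergeometric distribution). The species names and numbers are hypothetical, this is not necessarily the metric used in the betacoronavirus work mentioned above, and a real comparison would also need a significance test rather than a single ratio.

```python
def expected_random_hits(n_candidates, n_new_hosts, k):
    """Mean number of new hosts in a uniformly random top-k list
    (hypergeometric mean)."""
    return k * n_new_hosts / n_candidates


def observed_hits(ranking, new_hosts, k):
    """How many newly discovered hosts appear in a model's top-k predictions."""
    return sum(1 for species in ranking[:k] if species in new_hosts)


def enrichment(ranking, new_hosts, k):
    """Observed / expected hits; values near 1 mean 'no better than random'.

    Assumes `ranking` covers every candidate species and `new_hosts` is non-empty.
    """
    expected = expected_random_hits(len(ranking), len(new_hosts), k)
    return observed_hits(ranking, new_hosts, k) / expected


# Hypothetical example: 10 candidate species, 2 later found to be hosts.
ranking = ["sp3", "sp7", "sp1", "sp9", "sp2", "sp4", "sp5", "sp6", "sp8", "sp10"]
new_hosts = {"sp7", "sp6"}
print(enrichment(ranking, new_hosts, k=5))  # 1.0
```

Here one hit is exactly what a random top-5 list would be expected to catch, so this hypothetical model does no better than chance at that cutoff.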

We need to do the same for zoonotic risk technology: surprise, break, improve, repeat. And then, when we present these tools - when we put them in the hands of field surveillance teams, global pandemic monitoring systems, or even just other computational researchers - we need to be clear about the possibility of evolutionary surprise. It’s not a reason not to do the work: it’s the horizon we’re chasing.

* Minus some browser specific bugs.

** Tremendous thanks to Eneida Hatcher of NCBI for help with the alignments!